Node Js Php Serialize Function

In this tutorial, James Kolce shows how to create a note-taking app using Hapi.js, Pug, Sequelize and SQLite. Learn to build Node.js MVC apps by example.

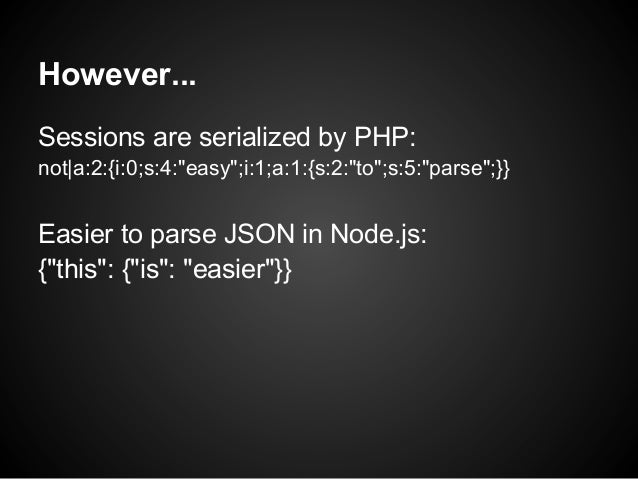

Nov 20, 2017. In this article we will use the Newtonsoft JSON serialization library to serialize JSON data. PHP Objects Manual. Initialization, Instantiation and Instances are terms that can be confusing at first. This tutorial will help you understand them. Relational relations. Orbit.js is a standalone library for coordinating access to data sources and keeping their contents synchronized. Serialization for your Node ORM. Jaysonapi is a framework-agnostic JSON API v1.0.0 serializer. It provides more of a functional approach to serializing your data.

Times are the mean number of milliseconds to complete a request across all concurrent requests. Lower is better. It’s hard to draw a conclusion from just this one graph, but to me it seems that, at this volume of connections and computation, we’re seeing times that have more to do with the general execution of the languages themselves than with the I/O.

Note that the languages which are considered “scripting languages” (loose typing, dynamic interpretation) perform the slowest. But what happens if we increase N to 1000, still with 300 concurrent requests - the same load but 100x more hash iterations (significantly more CPU load). Times are the mean number of milliseconds to complete a request across all concurrent requests. Lower is better. All of a sudden, Node performance drops significantly, because the CPU-intensive operations in each request are blocking each other.
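To make the blocking effect concrete, here is a minimal sketch of the kind of workload described: each request performs N rounds of SHA-256 hashing on a single-threaded event loop. It is written in Python with `asyncio` purely as an illustration and is not the benchmark's actual code; the names `hash_rounds`, `handle_request` and `serve`, and the request body, are hypothetical.

```python
import asyncio
import hashlib


def hash_rounds(payload: bytes, n: int) -> str:
    """Apply SHA-256 n times, feeding each digest into the next round."""
    digest = payload
    for _ in range(n):
        digest = hashlib.sha256(digest).digest()
    return digest.hex()


async def handle_request(request_id: int, n: int, events: list) -> str:
    """Hypothetical handler: records when it starts and ends its CPU work."""
    events.append(("start", request_id))
    # No await inside the hashing loop, so the event loop cannot switch
    # to another request until this one has finished all n rounds.
    result = hash_rounds(b"hypothetical request body", n)
    events.append(("end", request_id))
    return result


async def serve(concurrent: int, n: int) -> list:
    """Run `concurrent` handlers on one event loop and return the event log."""
    events: list = []
    await asyncio.gather(
        *(handle_request(i, n, events) for i in range(concurrent))
    )
    return events


if __name__ == "__main__":
    for event in asyncio.run(serve(concurrent=3, n=1000)):
        print(event)
```

Although the three requests are submitted together, the log shows each one starting only after the previous one has ended. With N=1 the CPU work per request is tiny and this serialization is barely noticeable; as N grows, every request waits behind the hashing of those ahead of it, which is consistent with the drop described above.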

And interestingly enough, PHP’s performance gets much better (relative to the others) and beats Java in this test. (It’s worth noting that in PHP the SHA-256 implementation is written in C and the execution path is spending a lot more time in that loop, since we’re doing 1000 hash iterations now). Now let’s try 5000 concurrent connections (with N=1) - or as close to that as I could come. Unfortunately, for most of these environments, the failure rate was not insignificant.

For this chart, we’ll look at the total number of requests per second. The higher the better.

Total number of requests per second. Higher is better.

And the picture looks quite different. It’s a guess, but it looks like at high connection volume the per-connection overhead of spawning new processes, and the additional memory associated with it in PHP+Apache, becomes a dominant factor and tanks PHP’s performance.
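The two metrics used in these charts, mean time per request and total requests per second, can be derived from raw measurements roughly as follows. This is a sketch under assumptions: the function name `summarize` and its inputs (a list of per-request latencies and the wall-clock duration of the run) are hypothetical, and the original benchmark tooling may have computed its figures differently.

```python
from statistics import mean


def summarize(latencies_ms: list, wall_clock_s: float) -> tuple:
    """Return (mean latency in ms, requests per second) for one run.

    latencies_ms: completion time of each successful request, in milliseconds.
    wall_clock_s: elapsed time of the whole run, in seconds.
    """
    if not latencies_ms or wall_clock_s <= 0:
        raise ValueError("need at least one request and a positive duration")
    mean_latency_ms = mean(latencies_ms)
    requests_per_second = len(latencies_ms) / wall_clock_s
    return mean_latency_ms, requests_per_second


if __name__ == "__main__":
    # Made-up sample values, for illustration only.
    print(summarize([10.0, 20.0, 30.0], wall_clock_s=2.0))
```

The two numbers answer different questions: mean latency describes what a single client experiences, while requests per second describes how much work the server completes overall, which is why the ranking can change between charts.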

Clearly, Go is the winner here, followed by Java, Node and finally PHP. While the factors involved with your overall throughput are many and also vary widely from application to application, the more you understand about the guts of what is going on under the hood and the tradeoffs involved, the better off you’ll be.

In Summary

With all of the above, it’s pretty clear that as languages have evolved, the solutions for dealing with large-scale applications that do lots of I/O have evolved with them. To be fair, both PHP and Java, despite the descriptions in this article, do have implementations of non-blocking I/O available for use in web applications. But these are not as common as the approaches described above, and the attendant operational overhead of maintaining servers using such approaches would need to be taken into account. Not to mention that your code must be structured in a way that works with such environments; your “normal” PHP or Java web application usually will not run without significant modifications in such an environment. As a comparison, if we consider a few significant factors that affect performance as well as ease of use, we get this: Language Threads vs.